Uzone.id – Apple has introduced a new feature for iOS 18 and iPadOS 18 users called Eye Tracking. As the name suggests, this feature allows users to control their iPhones or iPads simply by using their eye movements.

Familiar to Android Users

This feature might seem familiar to Android users, since comparable features such as Air Gesture have been available on some Android smartphones from Oppo and Realme for quite some time. Apple’s implementation, however, is not merely a gimmick. Apple states that Eye Tracking is designed to assist users with physical disabilities, enabling them to control their iPads or iPhones with their eyes.

“We believe deeply in the transformative power of innovation to enrich lives,” said Tim Cook, Apple’s CEO. “That’s why for nearly 40 years, Apple has championed inclusive design by embedding accessibility at the core of our hardware and software. We’re continuously pushing the boundaries of technology, and these new features reflect our long-standing commitment to delivering the best possible experience to all of our users.”

Sarah Herrlinger, Apple’s senior director of Global Accessibility Policy and Initiatives, added, “Each year, we break new ground when it comes to accessibility. These new features will make an impact in the lives of a wide range of users, providing new ways to communicate, control their devices, and move through the world.”

Eye Tracking in iOS 18 and iPadOS 18 Is Powered by AI

Eye Tracking in iOS 18 and iPadOS 18 is powered by artificial intelligence (AI) and gives users a built-in option to navigate their iPhones and iPads using only their eye movements. The feature uses the selfie camera to detect eye movements and calibrates in seconds, and its capabilities are continually improved through built-in machine learning.

Apple also ensures that all user settings are securely stored on the device and not shared with Apple or any third party. This is why users must recalibrate their gaze in Eye Tracking each time the feature is turned off and on.

What Is Eye Tracking Used For?

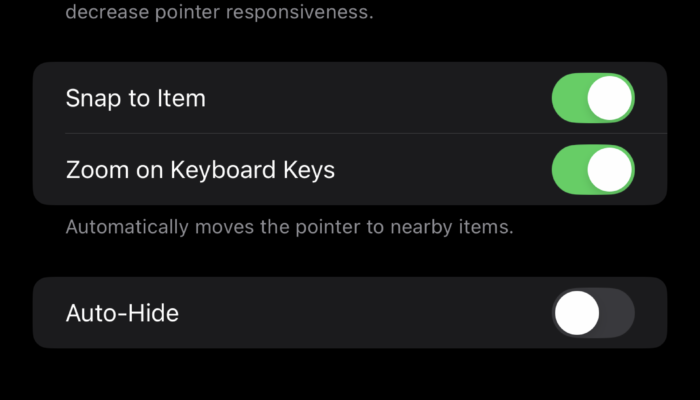

With Eye Tracking, Apple has introduced an innovative way for users to interact with their iPhones and iPads. This cutting-edge feature enables users to “press” buttons or trigger certain functions just by focusing their gaze. Known as Dwell Control, this feature automatically activates a button or performs an action when the user’s gaze lingers on a specific part of the screen or app for a set period of time.
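To make the idea of dwelling concrete, here is a minimal Python sketch of a dwell timer. It is only an illustration of the general technique, not Apple's implementation: the `DwellDetector` class, the element names, and the one-second threshold are all hypothetical, and the gaze target is assumed to be supplied by some other component.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellDetector:
    """Hypothetical dwell timer: fires once when the gaze rests on one element long enough."""

    dwell_seconds: float = 1.0      # assumed threshold, not an Apple default
    _target: Optional[str] = None   # element currently under the gaze
    _since: float = 0.0             # time at which the gaze arrived on that element
    _fired: bool = False            # prevents repeated triggers during one long stare

    def update(self, target: Optional[str], now: float) -> Optional[str]:
        """Feed the element being looked at; return it when the dwell completes."""
        if target != self._target:
            # The gaze moved to a different element (or off-screen): restart the timer.
            self._target, self._since, self._fired = target, now, False
            return None
        if target is not None and not self._fired and now - self._since >= self.dwell_seconds:
            self._fired = True
            return target
        return None


# Simulated gaze samples: (element under the gaze, timestamp in seconds).
detector = DwellDetector(dwell_seconds=1.0)
samples = [("send", 0.0), ("send", 0.5), ("send", 1.0), ("cancel", 1.2)]
activated = [detector.update(element, t) for element, t in samples]
```

In this sketch, only the third sample completes a full second on the same element, so that is the only point at which an activation is reported. Looking away resets the timer.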

What’s truly fascinating is that Eye Tracking works seamlessly across all apps on iPadOS and iOS—without the need for any additional hardware or accessories. Now, users can effortlessly navigate through app elements, activate buttons, and perform gestures such as swipes, all simply by using their eyes.

However, it’s important to note that while this feature is available in iOS 18 and iPadOS 18, not all devices support Eye Tracking. Let’s take a look at which devices are compatible with this exciting new technology:

- iPhone SE 3

- iPhone 12

- iPhone 12 Mini

- iPhone 12 Pro

- iPhone 12 Pro Max

- iPhone 13

- iPhone 13 Mini

- iPhone 13 Pro

- iPhone 13 Pro Max

- iPhone 14

- iPhone 14 Plus

- iPhone 14 Pro

- iPhone 14 Pro Max

- iPhone 15

- iPhone 15 Plus

- iPhone 15 Pro

- iPhone 15 Pro Max

- iPhone 16

- iPhone 16 Plus

- iPhone 16 Pro

- iPhone 16 Pro Max

- iPad Mini (6th generation)

- iPad (10th generation)

- iPad Air (4th generation and later)

- iPad Air M2

- iPad Pro 11-inch (3rd generation and later)

- iPad Pro 11 M4

- iPad Pro 12.9-inch (3rd generation and later)

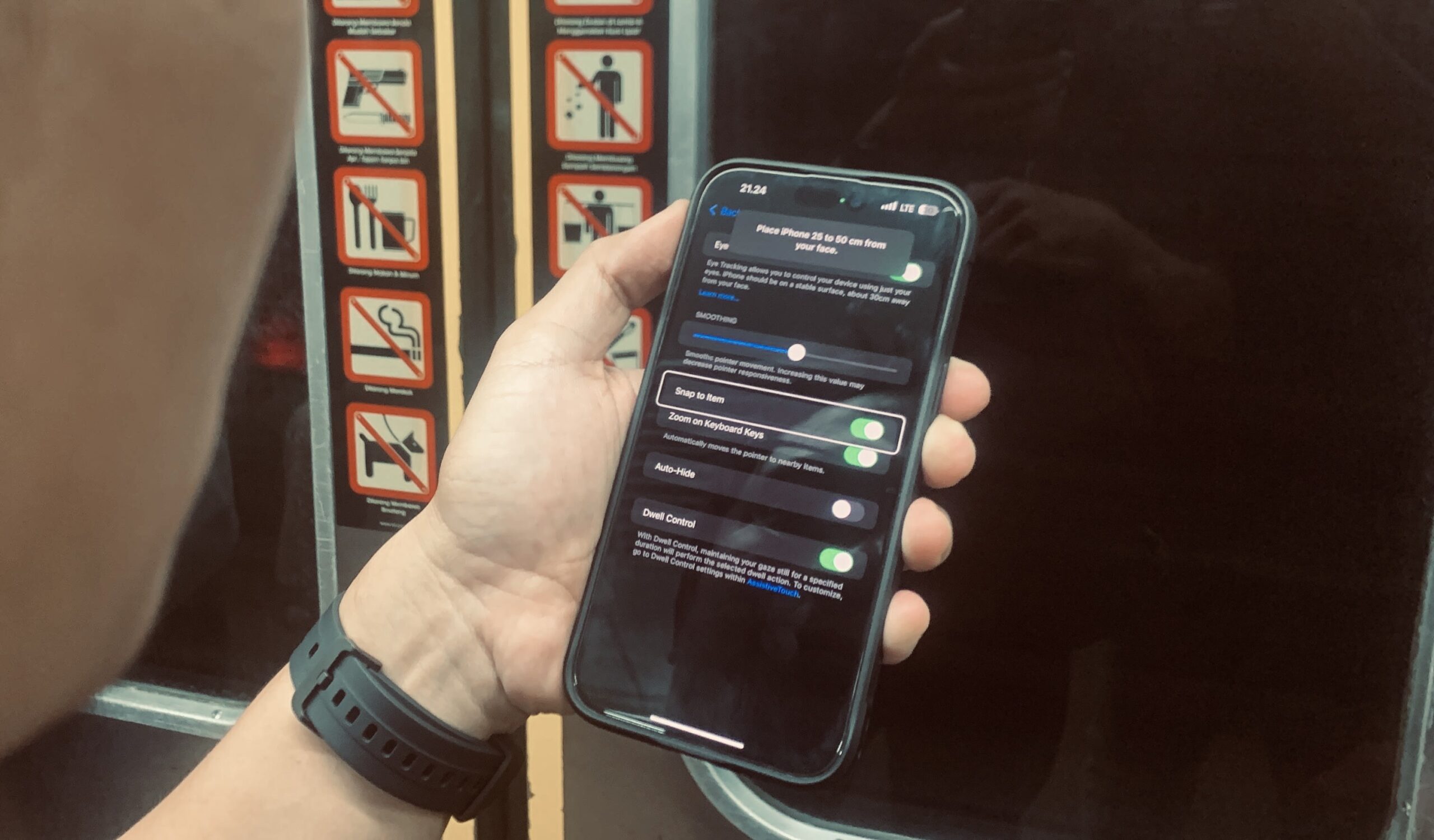

How to activate Eye Tracking on iPhone:

- Go to Settings, then Accessibility.

- Select Eye Tracking and toggle it on.

- Complete the eye calibration process by following the colored dots with your eyes as they appear on the screen.

- Once calibration is complete, you can use Eye Tracking to control your iPhone.

When you look at an item on the screen, an outline will appear around it. To press a button or confirm a selection, stare at the item for a few moments until a pointer or gray dot appears.

Hope it is useful!